By Enhua Guo, Ocean University of China, Qingdao, China

[bg_collapse view=”button-red” color=”#ffffff” icon=”arrow” expand_text=”What’s a data session report?” collapse_text=”Hide this explanation” ]

Data sessions are a central feature of research in ethnomethodology (EM) and especially conversation analysis (CA). Although data session practices vary across institutions, fields, or even linguistic communities, the core idea remains the same; one or more participants makes a set of empirical data available to a group of people with shared research interests and they all contribute ideas, or alternative perspectives.

Even though the idea is always more or less the same, there are important differences in how people run data sessions, as well as challenges to be overcome. The Data session report will focus on the mechanics of data sessions across various institutional or linguistic contexts. Some of the questions we will be discussing are the following:

CALL FOR CONTRIBUTIONS

Data sessions all over the world foster communities of like-minded researchers and Data session report seeks to bring those communities together, creating room for discussions on how to make the most out of this amazing research practice. We invite you to share your data session experiences, tips or formats, or talk about specific challenges you have faced.

- How many times do people play a recording?

- How do they take turns?

- How are people invited?

- How open is a session and how are people who are not acculturated into data sessions encouraged to participate?

- How do people prepare transcripts?

- How do they deal with varied linguistic capacities in the room?

- How do presenters prepare transcripts for multi-lingual sessions? What kind of transcription conventions do they use? Are 3 line transcripts always effective? Etc.

Please email your contribution to pubs@conversationanalysis.org

[/bg_collapse]

Introduction

Data sessions are an essential means of doing being a member of the CA community, and more importantly, a means to increase the validity and reliability of the research findings. In China, only a very small number of universities and research institutes conduct in-person CA data sessions on a regular basis, including Shanxi University, Ocean University of China, Shandong University, etc. Unfortunately, the outbreak of Covid-19 pandemic in 2020 brought them all to an abrupt halt. However, the passion and need among Chinese CA scholars for data sessions persisted through Covid-19. It was this unstoppable academic zeal in the time of Covid that catalysed the birth of the CA webinar of Happy Data Session in China (HDS). The first HDS was launched on April 23, 2020, by Guodong Yu and Enhua Guo as part of the Conversation Analysis Team of Ocean University of China (OUCA). ((OUCA is a key university research team funded by the Social Science Administration Office of OUC, and the team leader of OUCA is Prof. Guodong Yu. Other founding members include Yaxin Wu, Paul Drew, Kobin Kendrick, Shujun Zhang, Yao Wang, and Enhua Guo. OUCA is well-known in China for its research quality, and OUCA is to launch a Mandarin conversation corpus, including recordings and their transcriptions, (DMC, DIG Mandarin Conversations) very soon. The corpus aims to provide a publicly accessible Chinese conversation corpus for conversation analysts around the world.))

HDS is devoted to three major objectives: (1) to establish a bigger community of Chinese CA scholars; (2) to apply the insights and methods of CA to Chinese data (both mundane and institutional); and (3) to explore the features of Chinese talk-and-body-in-interaction. The most fundamental characterization of CA data session as a research praxis might be to analyse data from the participant’s perspective (also known as the ‘emic approach’). In this regard, data sessions offer a perfect platform for CA practitioners to experience ways of putting themselves into the participants’ shoes in order to avoid analytical subjectivity and increase analytical validity. Although HDS did not arise in the first place from the need to teach CA novices how to do CA, it constitutes an indispensable site to empower them to master CA as a research praxis. Experienced CA experts can use data sessions to teach CA novices how to look at data from the participants’ eyes. In each HDS, we have at least one experienced CA researcher attending, including Guodong Yu, Ni-Eng Lim (Nanyang Technological University, Singapore), Yaxin Wu (Ocean University of China), Wei Zhang (Tongji University, China), etc.

HDS is held on a weekly basis. Normally, each HDS meeting lasts for three hours, but the time limit is not rigid: it can be extended if the participants display a strong tendency to prolong the ongoing data discussion. As all HDS participants are native Chinese speakers and most of the data presented are interactions conducted in Chinese, Chinese is designated as the working language, but it can be flexibly switched to English when international guest scholars are involved. The online platform that we have adopted for HDS is Tencent Meeting (known as VOOV Meeting outside China).

The average number of participants for each HDS meeting is 25-30. One striking characteristic of HDS, as distinguished from many other international webinars, is that all HDS participants prefer an invisible mode. That is, the participants can only see each other’s avatars (with identified real names below), and the microphone status (mute or unmute), but not their faces or other embodied features. Whether unwillingness to show their faces during HDS meeting is related to the implicit, withdrawn ethos in Chinese culture is a relevant topic remaining to be explored. The interactional ecology – where the participants’ visual embodiment is mutually inaccessible – has consequences for its dynamics in turn-taking, especially the management of overlap, silence, and turn completion. We will come back to the meta-discussion of this in Section 3 by focusing on the moment-by-moment silence management in HDS meeting. Before moving on to Section 3, we consider that it might be useful to share how HDS protects the identity of the participants in the data, and the data ownership as well.

How do we protect the identity of the participants in the data and the data ownership in HDS?

When data sessions become online, where the data (video or audio file) and the data transcripts are often shared, people may be concerned about the safety of their data and the identity of the interactants in the data. From the very beginning of HDS, we have given priority to closing this research ethics loophole and have put in place a double-gatekeeping mechanism in safeguarding the data.

Firstly, all HDS participants have to be verified with real names. In case the data are leaked by any HDS participant without the data owner’s permission, the online HDS meeting record can serve as a research ethics proof that would hold the person accountable.

Secondly, besides HDS meetings supported by Tencent (VOOV), we also have a regular WeChat group named Happy Data Session in China. New membership has to be recommended before getting accepted into the group. After securing the membership, each member has to use real names and affiliations as identification. This requirement on the HDS WeChat group membership, in combination with the protocol that each HDS meeting participant should identify themselves with his/her real name, constitutes a double gatekeeping mechanism to protect the data routinely shared by the data presenter in advance of each incipient HDS. By means of this, every HDS meeting participant is checkable and trackable from the WeChat group, and would be held accountable if any violation of the presenter’s data ownership or other research ethics issues occurred.

Apart from the gatekeeping function, HDS WeChat group constitutes a forum where the weekly update on the upcoming HDS is shared, which includes: (1) introducing data topic and data presenter, (2) announcing the online meeting ID and password, and (3) sharing the data and data transcripts, etc. This enables the HDS members to have a preview of the data and decide in advance whether it is relevant and interesting enough for him/her to join. During and after each HDS discussion, the literature referred to by any HDS participant can be shared to WeChat group so that people can engage further with relevant studies. Sometimes, discussion will continue in the forum of the HDS WeChat group after the online HDS meeting is over. In the next section, we will move on to the meta-discussion of how silence is managed moment by moment in HDS.

The management of silence in HDS.

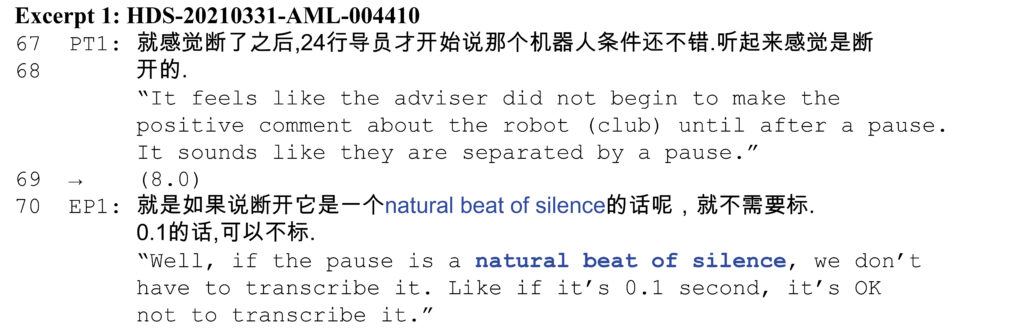

It seems that in HDS the temporal constraint for the allowable, natural limit of inter-turn silence, which is 0.1-0.2 seconds in ordinary conversations (see more on Levinson 2015), is largely relaxed. This is illustrated by Excerpt 1, where an 8-second silence occurs in Line 69.

In Excerpt 1 the two participants didn’t seem to treat the 8-second silence as problematic: PT1 didn’t pursue feedback from co-participants while the EP1 didn’t deliver the response until 8 seconds later.

In fact, even longer but allowable silences (more than 8 seconds) occur in this case of HDS. Their occurrence seems to be concentrated on two major occasions:

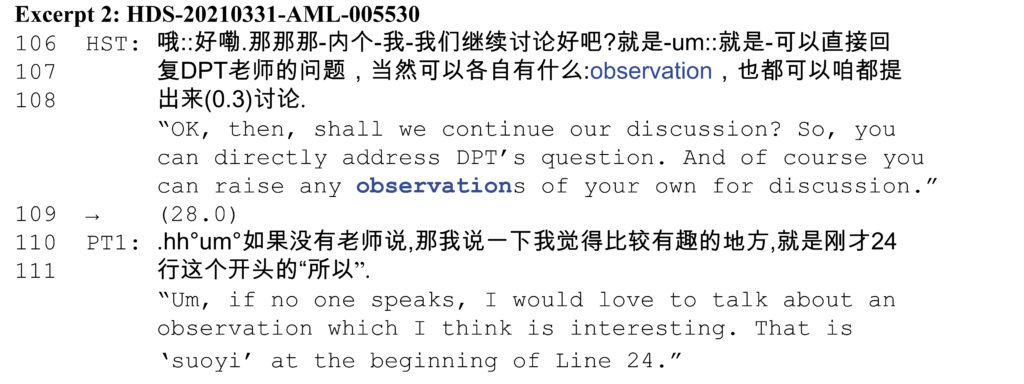

(1) It occurs when the host (HST) attempts to chart the course of HDS or encourage HDS participants to make more contributions. This is shown in Excerpt 2.

From Line 106 to 108, the host called for continued progress of the meeting which had been disrupted repeatedly by one HDS participant’s background noise. He suggested that HDS participants either address the data presenter (DPL)’s question or put forward their own observations. The suggestion projects an open-ended, wide range of responses, with less conditional relevance constraint on the other HDS participants. The 28-second silence in Line 109 after the host’s suggestion is not treated by the HDS participants as problematic. This is shown by the turn design in PT1’s response (Line 110 and 111), where she oriented to the long silence as a time lapse needed for the HDS participants to think and reflect before offering contributions. And the one who comes up with an observation or comment is readily enabled by the lapse to make the first contribution.

According to our retrospective reflection, long silences also occur frequently at the beginning of HDS data sessions. After the data is played online and the ensuing 10-minute, intra-participant data analysis is completed, the host has to call for the comeback of the participants and initiate the interparticipant discussion. But in this specific case, we don’t have the data-based evidence because in the first 30 minutes, the participants are more focused on revising technical issues related to the data transcript.

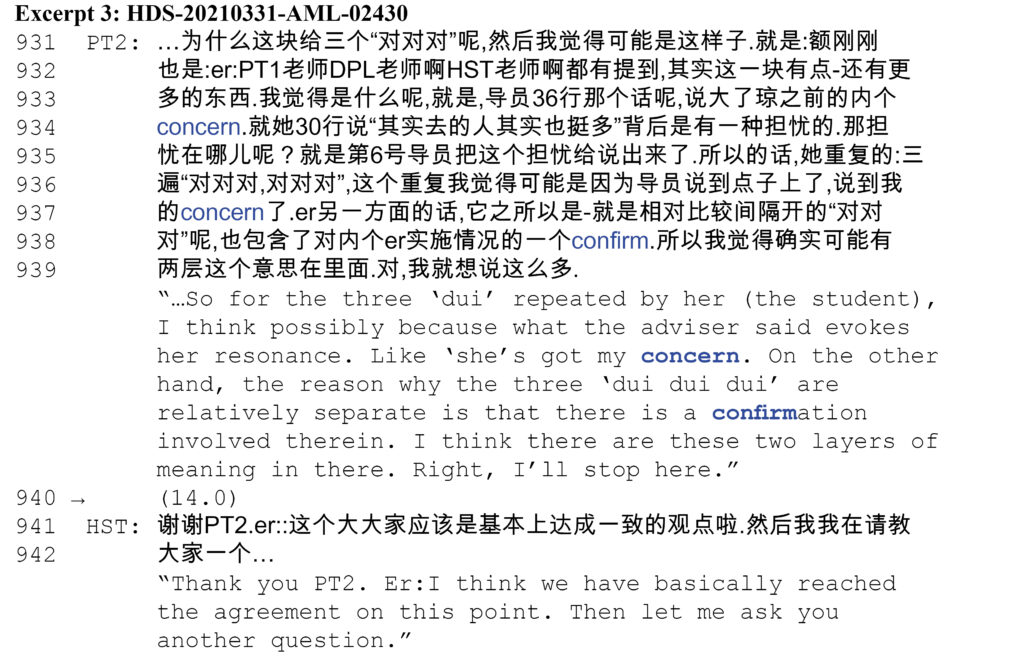

(2) Allowable silence of more than 8 seconds also happens where a concentrated discussion regarding a specific point of interest has come to its conclusion. The longer silence gives the rest of the participants an opportunity to add more comments or observations. If no such contributions are added, the host can wrap it up and throw in another agenda to maintain the progressivity of the data session.

One commonality of the initiating turns in Excerpt 1, 2, 3 is that they implement the same action of telling my side to fish for co-participants’ responses, if any. This action is weaker in the projection force than actions like information/feedback-seeking questions (Excerpt 4) or a request (Excerpt 5). The latter actions project a stronger conditional relevance in that they are more imposing on the recipients’ delivery of responses.

In Excerpt 4, at the end of PT1’s turn, she explicitly launches a question and requests to hear coparticipants’ ideas or feedback on her question (Line 154). However, after a 4-second silence, no interactant responded. In Line 156, the host interfered by thanking PT1’s contribution. Interestingly, the host’s gratitude conveyed in Line 156 is not designed to conclude this interactional sequence initiated by PT1, but rather to maintain the continuity of the sequence, as is evidenced by the slightly rising intonation of this turn and the additional 8 seconds following the turn. For the host, the 8-second silence (Line 157) provides an allowable time limit for coparticipants’ pre-response thinking. This is supported by the host’s turn design in Line 158, where he not only pushed for coparticipants’ thoughts on the question by PT1 but also reformulated PT1’s question more concisely and straightforwardly. Even after this, 6 seconds lapsed before an expert participant (EP2) made a statement that more time was needed for thinking (Line 160). Again, EP2’s response displays that she orients to the 6-second silence in Line 159 as longer than naturally expected. This orientation reflects the conditional relevance established by PT1’s request, the host’s pursuit, and the two prior silences: the three factors accumulate a stronger projection force for co-participants’ timely response.

Excerpt 5 shows how a delayed response to a request is being pursued by the requester (the host).

In Line 402, the host noticed that in the in-meeting chat room PT1, PT2 and PT3 were discussing a topic which was radically different from the one being vocally discussed. Therefore, he issued a request for them to voice out their discussion. However, no one responded after 6 seconds. In Line 404, the host upgraded the request into a more straightforward directive in order to pursue their vocal response. The host’s pursuit displays his orientation to 6 seconds as longer than expected.

Note the analysis above is arrived at entirely within the analytical limit of this specific case of HDS interaction. Whether its generalizability and validity work for other HDS interactions, let alone other CA data sessions, awaits further verification across a larger scale and wider range of CA data session samples.

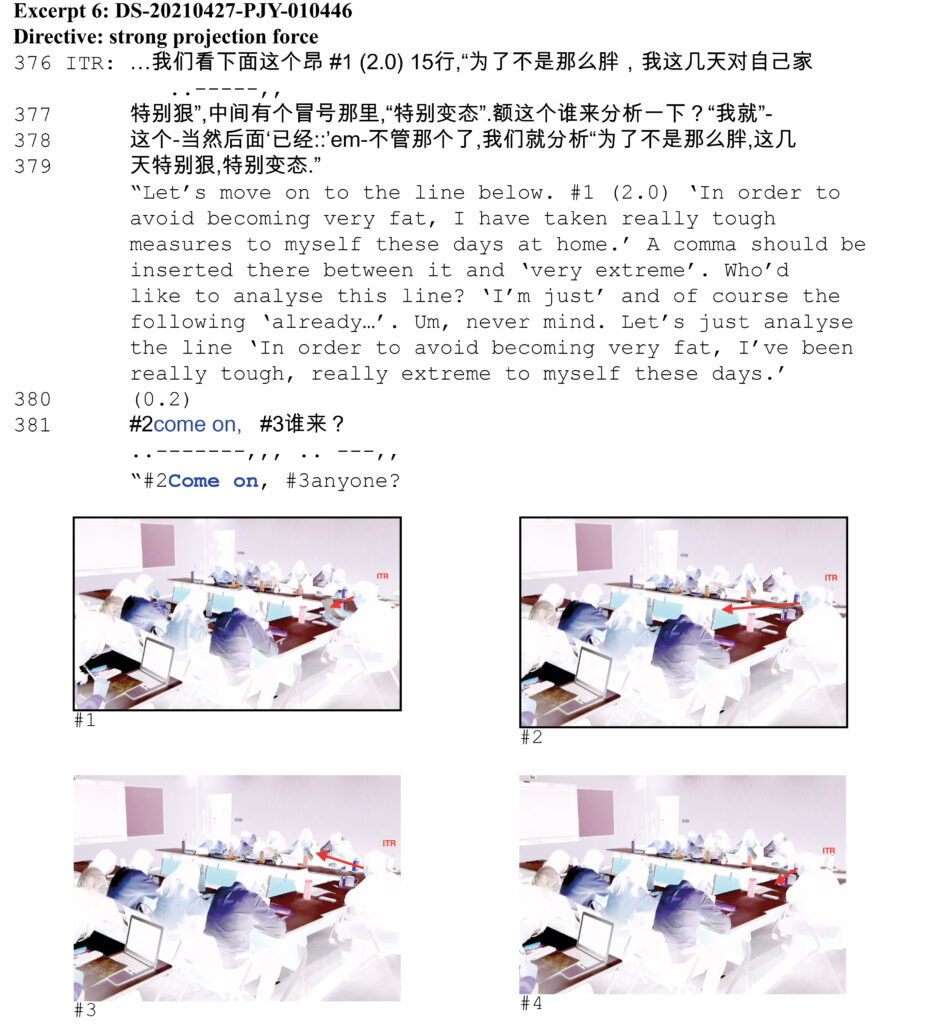

Weeks after writing up this piece, we have video-recorded two in-person CA data sessions occurring at OUC in order to make a comparative study. We find that silences in in-person data sessions are by and large shorter than those in HDS. In the two video-recorded data session cases (each lasts for 2 hours), all inter-turn silences are no more than 4 seconds. One plausible reason that we can think of is that in face-to-face data session interactions, the availability of a full range of embodied, multimodal resources allows the interactions to conducted more adequately and smoothly. There is, however, one deviant case whereby the silence lasts about 10 seconds, as is shown in Excerpt 6.

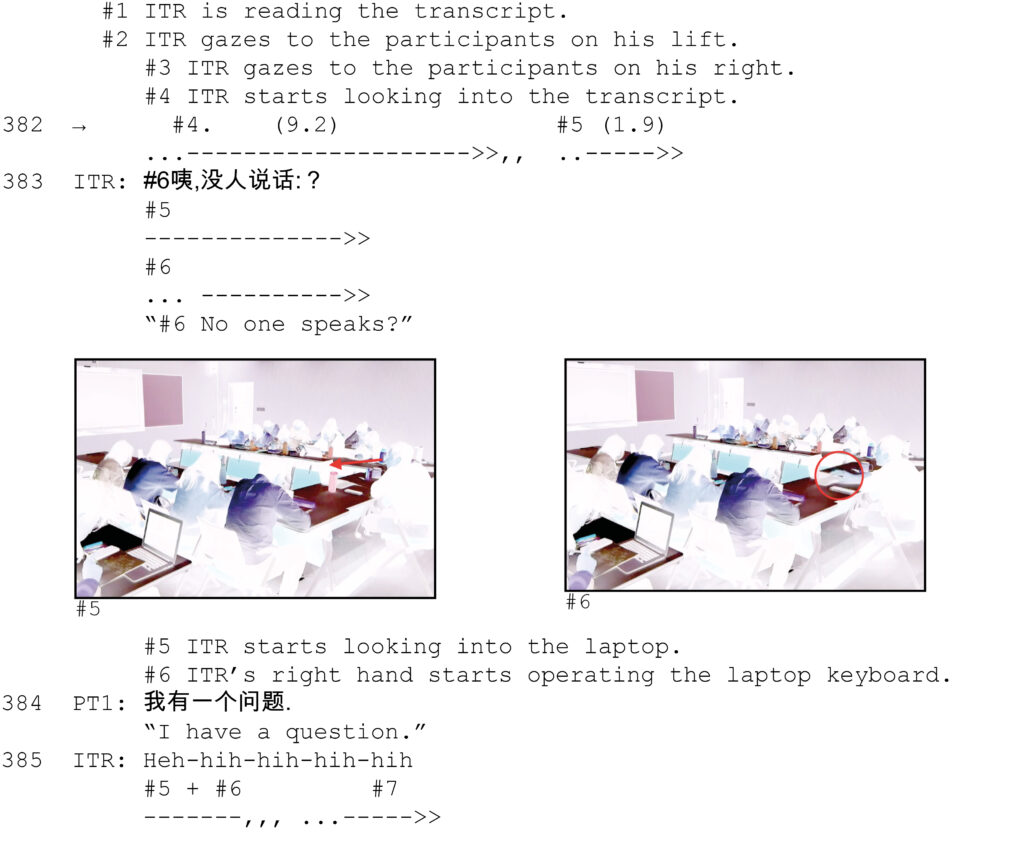

In Excerpt 6, the CA instructor (ITR), who is an established CA expert, was hosting the data session. Although some participants were joining the data session virtually via the instructor’s laptop, most interactions occurred among physically co-present participants. From Line 376-379, the instructor called for attention on a specific line in the transcribed handout and made an open-ended request (“Who’d like to analyse this line?”) to seek for a specific volunteer to analyse the line in question. Meeting with no immediate response (evidenced by 0.2-second gap in Line 380) from the participants, the instructor pursued with a code-switched expression (“come on”) and a shortened reformulation of the request (“anyone?”). In fact, the pursuing was implemented in a multimodal gestalt: the verbal expression (“come on”) occurred simultaneously with the instructor’s gaze directed to the data session participants on his left side (#2), which in turn was immediately followed by gaze shift to his right (#3). Suppose that no verbal practice had occurred in Line 381, the instructor’s gaze shift from his left to his right by itself could also enact a nonverbal pursuit of response. However, despite this multimodal pursuit of response, a 11-second silence occurred in Line 382. Such long silences in in-person data sessions are so rare and deviant that they should be given special attention. By looking into the nonverbal conduct of the instructor along with this long silence, we have found that the instructor suddenly withdrew his gaze from his left and started rereading the transcript again (#4). This embodied action has largely mitigated the projection force of his open-ended request and his pursuing appeal for a volunteering participant. Also, by lowering his eye gaze and reading the transcript, the instructor himself was doing “thinking over” the line in question and therefore tacitly allowing the other participants more time to process the line before responding. During the first 9.2 seconds, the instructor did not pursue response from all the rest participants. After that, he began looking into his laptop (#5) to check whether any virtual participants would volunteer to talk. ITR’s eye gaze shift was coordinated with his right hand touching the keyboard (#6) and operating the ongoing Tencent webinars. It was not until this moment that the instructor pursued again for co-participants’ response (Line 383). From this interactional episode, it is obvious that the instructor’s embodied actions can play a role in modifying the interactional trajectory projected by his previous verbal talk. Although the conditional relevance of the initiating action still exists, the projection force is mitigated by relaxing the temporality of the response delivery.

Excerpt 6 shows that a long inter-turn silence (over 4 seconds) did occur in a conditionally relevant position in face-to-face data session interactions, but a multimodal investigation of its occurrence indicates that its occurrence was in sync with a data-session-relevant embodied action. This embodied action undermines the temporal constraint of the delivery of the responding action. Notwithstanding the prolonged silence, the embodied action by no means interrupted the progressivity of the data session, because the instructor’s rereading the transcript on its own is still part of doing a data session. Here, the instructor and the rest of the participants seem to be tacitly doing “thinking together over” the line in question, which is perfectly appropriate and necessary for enabling further discussions. It seems that the very notion of data session progressivity needs to be redefined when it moves from the online context to the in-person context, especially when the former is characterized by a voice-only mode. In in-person data sessions, the progressivity is not oriented as interrupted or suspended when the participants are engaged in various non-verbal yet still data-relevant activities, such as reading the transcripts, writing down notes, murmuring opinions with neighbouring co-participants.

To summarize, based on the three cases (one screen-recorded online HDS and two video-recorded off-line CA data sessions), it can be tentatively argued that longer silences tend to happen more often in online CA data sessions than offline ones. Also, progressivity in in-person CA data sessions should be treated as a multimodal, embodied construct, rather than being simply characterized by the parameter of inter-turn silences. In contrast, the data session progressivity in webinars (such as HDS) is more dominantly marked by lack of inter-turn silences, especially those conducted in an invisible mode. Since no video channels are available for mutual monitoring of each other’s embodied actions, the silences are felt as more striking therein. Following this line of reasoning, it can be inferred that in CA data sessions online silences draw participants’ orientation more easily than offline silences, and therefore are more likely to be avoided. However, our findings indicate that just the opposite circumstance is true. One possible explanation is that in co-present data session interactions, the availability of fully embodied, multimodal practices allows the interactions to be conducted more adequately and smoothly. In addition, there are also factors that might possibly account for the extended inter-turn silences witnessed in HDS:

(1) The unavailability of mutual visibility has rendered impossible the role of eye gaze and other embodied resources in turn-taking organization;

(2) The institutional nature of CA data sessions, a type of in-depth data analysis, determines that participants be allowed more time to analyse and think before responding;

(3) The availability of multiple participants to respond to a prior participant’s turn may not only lead to overlaps (when more than one coparticipants respond simultaneously) but also extended gaps when the initiating action has a weak projection force.

(4) Technological affordances, provided by ZOOM, or TENCENT, etc., also have an impact on the progressivity of turn-taking in webinars. In comparison with face-to-face data sessions, ZOOM or TENCENT mediated webinars are more sensitive to overlapping sounds. In a webinar environment, any overlapping sound (be it another participant’s talk or background noise) leads to a more perceptible sense of disturbance to the ongoing interaction than in the off-line environment. ZOOM or TENCENT mediated webinar talk, once overlapped to whatever extent, is made unintelligible not only among the speakers themselves, but also to other webinar participants. To exploit this rigorous “one speaker at a time” feature of ZOOM or TENCENT, most Webinar participants have adapted a practical participation mode of keeping their microphone muted while another participant is speaking and only unmuting it on when their turn to speak comes. There are two consequences: firstly, tapping the microphone icon before being able to speak may extend turn-transitional silence. Sometimes, speakers might forget to unmute their micro-phone even after talking for a while. But of course, there are also occasions where the next speaker can anticipate the possible completion point of the current turn and mute him/herself in advance so that the ZOOM or TENCENT-mediated gap can be minimized. Secondly, and perhaps more importantly, this overlapping-sensitive feature of ZOOM or TENCENT has significantly reduced the amount of micro-sequential recipient responses such as continuers, acknowledgement tokens. As the temporality of such responses often matches the microphone unmuting time, their occurrence might not fall upon inter-TCU locations, hence causing interruption to the current speaker’s talk. To make this even worse in HDS is the participants’ preference of invisible participation, which makes many quasi-simultaneous, embodied responses such as nodding simply unavailable. All these practices play an important role in the concerted, emergent production of current turn (Goodwin 1979, 1980; Streeck, Goodwin & LeBaron 2011; Deppermann & Streeck 2018; Mondada 2018). The unavailability of them inevitably has a negative impact on the progressivity of the interaction.

References

Deppermann, Arnulf, & Jürgen Streeck (eds). 2018. Time in embodied interaction: Synchronicity and sequentiality of multimodal resources. John Benjamins Publishing Company.

Goodwin, Charles. 1979. The Interactive Construction of a Sentence in Natural Conversation. In Psathas, George (Ed.) Everyday Language: Studies in Ethnomethodology, 97–121. New York: Irvington.

Goodwin, Charles. 1980. Restarts, Pauses, and the Achievement of Mutual Gaze at Turn-Beginning. Sociological Inquiry 50 (3–4): 272–302.

Levinson, Steven C., and Francisco Torreira. 2015. Timing in Turn-Taking and its Implications for Processing Models of Language. Frontiers in Psychology 6: 1-17.

Mondada, Lorenza. 2018. Multiple Temporalities of Language and Body in Interaction: Challenges for Transcribing Multimodality. Research on Language and Social Interaction 51 (1), 85–106.

Streeck, Jürgen, Goodwin, Charles & Curtis LeBaron (eds). 2011. Embodied Interaction: Language and Body in the Material World. Cambridge: Cambridge University Press.